Job Pipelines

Writing pipelines

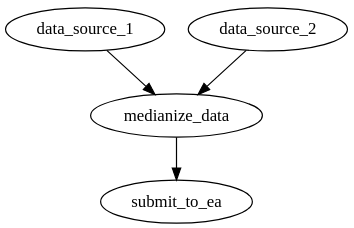

Pipelines are composed of tasks arranged in a DAG (directed acyclic graph), expressed in DOT syntax (see "DOT (graph description language)" on Wikipedia).

Each node in the graph is a task. A task has a user-specified ID and a set of configuration parameters (attributes):

my_fetch_task [type="http" method="get" url="https://chain.link/eth_usd"]

The edges between tasks define how data flows from one task to the next. Some tasks accept multiple inputs (such as median), while others are limited to zero inputs (http) or one input (jsonparse).

data_source_1 [type="http" method="get" url="https://chain.link/eth_usd"]
data_source_2 [type="http" method="get" url="https://coingecko.com/eth_usd"]
medianize_data [type="median"]
submit_to_ea [type="bridge" name="my_bridge"]

data_source_1 -> medianize_data
data_source_2 -> medianize_data
medianize_data -> submit_to_ea
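
To illustrate the input limits described above, here is a hedged variant that places a jsonparse task after each http task, so that every task receives the number of inputs it accepts: none for http, one for jsonparse, and several for median. The task IDs (fetch_1, parse_1, and so on) and the `data,price` path are illustrative assumptions. The path presumes each endpoint returns JSON shaped like {"data": {"price": ...}}, which the source does not state.

```dot
// Each http task has no inputs: it starts a branch of the graph.
fetch_1 [type="http" method="get" url="https://chain.link/eth_usd"]
fetch_2 [type="http" method="get" url="https://coingecko.com/eth_usd"]

// Each jsonparse task takes exactly one input: the response of one fetch.
// The path below is an assumption about the response shape.
parse_1 [type="jsonparse" path="data,price"]
parse_2 [type="jsonparse" path="data,price"]

// median accepts multiple inputs: one per parsed price.
medianize_data [type="median"]

// Edges can be chained; each arrow passes one task's output to the next.
fetch_1 -> parse_1 -> medianize_data
fetch_2 -> parse_2 -> medianize_data
```

Because the graph must stay acyclic, no edge may lead back to a task that appears earlier on the same path. Depending on the node version, a task may also need its input passed explicitly through an attribute rather than implied by the edge, so check the task reference for the version you run.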